I'm busy testing the migration process from InfluxDB 1.x to InfluxDB 2.x.

Version before upgrade: influxdb-1.12.2-3.x86_64

Version after upgrade: influxdb2-2.7.12-2.x86_64

Before the migration, I have a database called myowndata with a retention period of 90 days.

Relevant logs:

The migration copied the data and WAL to:

```
{"level":"debug","ts":1763645959.859166,"caller":"upgrade/database.go:129","msg":"Copying data","source":"/var/lib/influxdb/data/myowndata/autogen","target":"/var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen"}
{"level":"debug","ts":1763645960.0615513,"caller":"upgrade/database.go:144","msg":"Copying wal","source":"/var/lib/influxdb/wal/myowndata/autogen","target":"/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen"}
```
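If it helps to line up what the upgrade copied with what is on disk afterwards, the `Copying data` and `Copying wal` entries can be pulled out of the saved upgrade output. This is a minimal Python sketch, assuming the debug log was saved one JSON object per line; the function name `copy_steps` and the file name `upgrade.log` are hypothetical.

```python
import json
from typing import Iterable, List, Tuple


def copy_steps(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (msg, source, target) for every 'Copying data' / 'Copying wal' entry.

    Assumes one JSON object per line, as in the upgrade's debug output.
    Lines that are not JSON objects (or fail to parse) are skipped.
    """
    steps = []
    for line in lines:
        line = line.strip()
        if not line.startswith("{"):
            continue
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue
        if entry.get("msg") in ("Copying data", "Copying wal"):
            steps.append((entry["msg"], entry["source"], entry["target"]))
    return steps
```

For example, `copy_steps(open("upgrade.log", encoding="utf-8"))` should return the source/target pairs shown above.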

Migration log end:

```
{"level":"info","ts":1763645960.0618873,"caller":"upgrade/database.go:195","msg":"Database upgrade complete","upgraded_count":2}
{"level":"info","ts":1763645960.0619164,"caller":"upgrade/security.go:45","msg":"Upgrading 1.x users"}
{"level":"warn","ts":1763645960.0619307,"caller":"upgrade/security.go:50","msg":"User is admin and will not be upgraded","username":"ndewet"}
{"level":"debug","ts":1763645960.0619712,"caller":"tenant/middleware_user_logging.go:49","msg":"user find by ID","store":"new","took":0.000025285}
{"level":"debug","ts":1763645960.0619967,"caller":"tenant/middleware_org_logging.go:48","msg":"org find by ID","store":"new","took":0.000011586}
{"level":"debug","ts":1763645960.073645,"caller":"upgrade/security.go:107","msg":"User upgraded","username":"telegraf"}
{"level":"info","ts":1763645960.0736895,"caller":"upgrade/security.go:115","msg":"User upgrade complete","upgraded_count":1}
{"level":"info","ts":1763645960.0737052,"caller":"upgrade/upgrade.go:488","msg":"Upgrade successfully completed. Start the influxd service now, then log in","login_url":"http://localhost:8086"}
```

On startup, the v2 storage engine uses this data directory:

```
Nov 20 15:40:46 localhost influxd-systemd-start.sh[16275]: ts=2025-11-20T13:40:46.763397Z lvl=info msg="Using data dir" log_id=0~KNmadG000 service=storage-engine service=store path=/var/lib/influxdb/engine/data
```

TSM files created during the upgrade:

```
201327563 20672 -rw-r--r-- 1 influxdb influxdb 21167037 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/18/000000009-000000002.tsm
758107 20708 -rw-r--r-- 1 influxdb influxdb 21204362 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/19/000000009-000000002.tsm
67461582 20724 -rw-r--r-- 1 influxdb influxdb 21220185 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/20/000000009-000000002.tsm
135072339 20872 -rw-r--r-- 1 influxdb influxdb 21371102 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/21/000000009-000000002.tsm
201327567 20800 -rw-r--r-- 1 influxdb influxdb 21295731 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/22/000000009-000000002.tsm
758110 20784 -rw-r--r-- 1 influxdb influxdb 21281861 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/23/000000010-000000002.tsm
67461585 20784 -rw-r--r-- 1 influxdb influxdb 21280484 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/24/000000009-000000002.tsm
135072342 21020 -rw-r--r-- 1 influxdb influxdb 21522374 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/25/000000009-000000002.tsm
201327570 21020 -rw-r--r-- 1 influxdb influxdb 21520837 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/26/000000011-000000002.tsm
758113 18868 -rw-r--r-- 1 influxdb influxdb 19320716 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/27/000000010-000000002.tsm
67461588 20504 -rw-r--r-- 1 influxdb influxdb 20992814 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/28/000000009-000000002.tsm
135072345 21224 -rw-r--r-- 1 influxdb influxdb 21729577 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/29/000000010-000000002.tsm
201327575 21444 -rw-r--r-- 1 influxdb influxdb 21958196 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/30/000000009-000000002.tsm
758116 2612 -rw-r--r-- 1 influxdb influxdb 2672142 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/31/000000001-000000001.tsm
758117 2604 -rw-r--r-- 1 influxdb influxdb 2663576 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/31/000000002-000000001.tsm
758118 2600 -rw-r--r-- 1 influxdb influxdb 2661920 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/31/000000003-000000001.tsm
758119 32 -rw-r--r-- 1 influxdb influxdb 31733 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/31/000000004-000000001.tsm
758120 100 -rw-r--r-- 1 influxdb influxdb 99745 Nov 20 15:39 /var/lib/influxdb/engine/data/dbbedf296e09ccb1/autogen/31/000000005-000000001.tsm
```
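To make this listing easier to compare with a later one (for example, after the retention service has run), the file sizes can be totalled per shard directory. This is a minimal Python sketch, assuming the column layout shown above (inode, 1K blocks, mode, links, owner, group, size in bytes, month, day, time, path) and that the directory holding each `.tsm` file is the shard ID; `tsm_bytes_per_shard` is a hypothetical helper name.

```python
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, Iterable


def tsm_bytes_per_shard(lines: Iterable[str]) -> Dict[int, int]:
    """Sum TSM file sizes (in bytes) per shard ID.

    Assumed line layout: inode, blocks, mode, links, owner, group,
    size, month, day, time, path (11 whitespace-separated fields).
    The shard ID is taken from the directory containing the .tsm file.
    """
    totals: Dict[int, int] = defaultdict(int)
    for line in lines:
        fields = line.split()
        # Skip blank lines and anything that is not a .tsm entry.
        if len(fields) < 11 or not fields[-1].endswith(".tsm"):
            continue
        size = int(fields[6])
        shard_dir = PurePosixPath(fields[-1]).parent.name
        totals[int(shard_dir)] += size
    return dict(totals)
```

Fed the full listing above, it should report shard IDs 18 through 31, with shard 31 split across five smaller files.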

WAL folders created during the upgrade (unfortunately, I don't have a full listing):

```
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/18
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/19
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/20
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/21
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/22
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/23
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/24
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/25
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/26
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/27
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/28
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/29
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/30
/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen/31
```
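Since the WAL listing is incomplete, a full listing with file sizes could be captured on the next test run, before `influxd` is started. This is a minimal standard-library Python sketch; `list_files` is a hypothetical helper, and it needs read access to the WAL directory.

```python
import os
from typing import List, Tuple


def list_files(root: str) -> List[Tuple[str, int]]:
    """Return (path relative to root, size in bytes) for every file under root, sorted.

    Note: os.walk silently skips directories it cannot read (and yields nothing
    for a missing root), so an empty result may also mean a permissions problem.
    """
    entries = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            entries.append((os.path.relpath(full, root), os.path.getsize(full)))
    return sorted(entries)
```

For example: `for path, size in list_files("/var/lib/influxdb/engine/wal/dbbedf296e09ccb1/autogen"): print(size, path)`.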

The upgrade appears to complete successfully: I can see my data both in the InfluxDB v2 UI and in Chronograf connected to InfluxDB v2.

After the service has been running for 30 minutes, I see this in the logs:

```
Nov 20 16:10:47 localhost influxd-systemd-start.sh[16275]: ts=2025-11-20T14:10:47.889426Z lvl=info msg="Deleting expired shard group (start)" log_id=0~KNmadG000 service=retention op_name=retention_delete_check op_name=retention_delete_expired_shard_group db_instance=dbbedf296e09ccb1 db_shard_group=2 db_rp=autogen op_event=start
Nov 20 16:10:47 localhost influxd-systemd-start.sh[16275]: ts=2025-11-20T14:10:47.894079Z lvl=info msg="Deleted shard group" log_id=0~KNmadG000 service=retention op_name=retention_delete_check op_name=retention_delete_expired_shard_group db_instance=dbbedf296e09ccb1 db_shard_group=2 db_rp=autogen
Nov 20 16:10:47 localhost influxd-systemd-start.sh[16275]: ts=2025-11-20T14:10:47.894101Z lvl=info msg="Group's shards will be removed from local storage if found" log_id=0~KNmadG000 service=retention op_name=retention_delete_check op_name=retention_delete_expired_shard_group db_instance=dbbedf296e09ccb1 db_shard_group=2 db_rp=autogen shards=0,3
Nov 20 16:10:47 localhost influxd-systemd-start.sh[16275]: ts=2025-11-20T14:10:47.894108Z lvl=info msg="Deleting expired shard group (end)" log_id=0~KNmadG000 service=retention op_name=retention_delete_check op_name=retention_delete_expired_shard_group db_instance=dbbedf296e09ccb1 db_shard_group=2 db_rp=autogen op_event=end op_elapsed=4.685ms
```
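One thing that may be worth checking is whether the shard IDs in this retention message (shard group 2, `shards=0,3`) correspond to any of the shard directories created by the upgrade (18 to 31 in the TSM listing). This is a minimal Python sketch; `parse_logfmt` and `deleted_shards_on_disk` are hypothetical helpers, and the parser is a simplification of logfmt built on `shlex`, not the parser `influxd` itself uses.

```python
import shlex
from typing import Dict, List, Set


def parse_logfmt(line: str) -> Dict[str, str]:
    """Parse the key=value tokens of an influxd journal line.

    Tokens without '=' (syslog prefix, hostname, etc.) are ignored.
    For repeated keys such as op_name, the last value wins.
    shlex handles quoted values like msg="..." but raises ValueError
    on unbalanced quotes.
    """
    fields: Dict[str, str] = {}
    for token in shlex.split(line):
        if "=" in token:
            key, _, value = token.partition("=")
            fields[key] = value
    return fields


def deleted_shards_on_disk(line: str, on_disk: Set[int]) -> List[int]:
    """Return the shard IDs named in a retention log line that also exist on disk."""
    shards = parse_logfmt(line).get("shards", "")
    ids = {int(s) for s in shards.split(",") if s}
    return sorted(ids & on_disk)
```

With the on-disk IDs from the TSM listing, `deleted_shards_on_disk(line, set(range(18, 32)))` returns an empty list for the `shards=0,3` line, i.e. this particular message does not name any of the upgraded shards.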

Output of `influx bucket list`:

```
ID                Name                Retention   Shard group duration   Organization ID    Schema Type
6aeaee131cce724c  _monitoring         168h0m0s    24h0m0s                6ff68fa7b44da352   implicit
72bb6f939da448e0  _tasks              72h0m0s     24h0m0s                6ff68fa7b44da352   implicit
2277a5667194434f  default             infinite    168h0m0s               6ff68fa7b44da352   implicit
dbbedf296e09ccb1  myowndata/autogen   2160h0m0s   168h0m0s               6ff68fa7b44da352   implicit
b4086d5b3683d537  telegraf/autogen    infinite    168h0m0s               6ff68fa7b44da352   implicit
```
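As a sanity check on the numbers: `2160h0m0s` is exactly 90 days, and the shard group duration `168h0m0s` is 7 days. The sketch below assumes that a shard group counts as expired once its end time is earlier than "now minus the retention period"; that rule is an assumption here, not taken from the InfluxDB source. `parse_go_duration` and `shard_group_expired` are hypothetical helpers, and the parser only handles the `h`/`m`/`s` form shown in the bucket list (not `infinite`).

```python
import re
from datetime import datetime, timedelta, timezone


def parse_go_duration(text: str) -> timedelta:
    """Parse a Go-style duration such as '2160h0m0s' (hours, minutes, seconds only)."""
    match = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)m)?(?:(\d+)s)?", text)
    if not text or not match:
        raise ValueError(f"unsupported duration: {text!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def shard_group_expired(group_end: datetime, retention: timedelta, now: datetime) -> bool:
    """Assumed rule: a shard group is expired once its end time is before now - retention."""
    return group_end < now - retention


# Values from the retention log (UTC) and the myowndata/autogen bucket.
NOW = datetime(2025, 11, 20, 14, 10, 47, tzinfo=timezone.utc)
RETENTION = parse_go_duration("2160h0m0s")
```

Under this rule the cutoff is `NOW - RETENTION`, i.e. 2025-08-22 14:10:47 UTC, so a shard group holding data from 19 November 2025 should not qualify for deletion.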

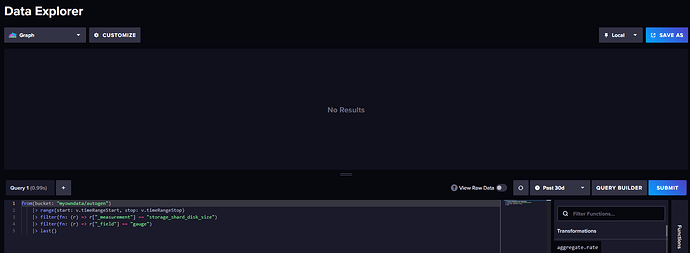

The data I could see in both Chronograf and the InfluxDB v2 UI is from 19 November 2025, well within the 90-day retention period, so it should not have been removed. However, all of the data has been deleted.

The problem is reproducible: I'm using a VM that I can roll back to the v1 state and repeat the process.

I would really appreciate any input or pointers on what to check next, as I can't have data disappearing when I do the upgrade in production.