Hi

have to say that I´m a beginner with influxdB and also have no real programming expertise so would be pleased to get some hints for improving bit by bit.

I´m using influxdB for a home automation system and sensor data is stored in it. Now I like to do some Queries and have it externally available in the automation system. I´ve already done this by using InfluxQL. For me it is similar to “normal” SQL Queries and together with a client like influxdb studio i was able to test and finally implement into the automation system.

Now I see thax Flux is much more powerful specialy by defining time ranges for queries. For example I like to use all data out of this month or the the month before based on the current point of date (now()). But I´m struggeling how to use and build Queries in Flux.

Out of this community and for example out of Grafana I see examples like this:

import “strings”

month = time(v: “${strings.substring(v: string(v: now()), start: 0, end: 8)}01T00:00:00Z”)

from(bucket: “piMeter”)

range(start: month)

filter(fn: (r) => r._measurement == “downsampled_energy” and r._field == >“sum_Gesamt”)

fill(value: 0.0)

aggregateWindow(every: 1d, fn:sum)

But Is this really the “phrase” I have to use for a Query? For influxQL I was able to make sth. like “Select …” as String workable from external system.

My I kindly ask if you can recommend how this would look like or is possible by using Flux?

Thank you very much in advance!

Edit: Is my understanding right, that i should investigate more in understanding how to query by the ‘influx query’ command? For me it seems to be the only option without external dedicated tool. Just sending query and receiving result?

I´m running influxdB 1.8.3 at a raspberry pi.

Hello @Pete0815,

I’m sorry I’m having trouble understanding what exactly it is that you want to do?

If you want to query the last month of data all you have to do is:

from(bucket: "my-bucket")

|> range(start: -1mo)

If this isn’t what you want, here is a complete list of Flux functions for manipulating timestamps and time with influxdb:

As for tools to query influxDB. I highly recommend using the InfluxDB UI and the Query Builder.

Can you explain a little more what you’re looking to do?

Thank you

Hello @Anaisdg,

that you very much for your helpful reply and many hints. This topic I wrote 7 days ago and this is very much for a beginner in influxDB to improve/understand

According your recommendation, today I had my first success by executing a query in flux by using influxDB CLI.

Now I have to improve and get closer to my goal which is to have a query for a data range for today, yesterday, this month and last month. Challange for me is yesterday and last month. Because this is a relative period but no direct usage of now(). Guess I´ll have to build sth. using >=date.month(now) -1 < date.month(now) - 2 to query for last month.

I will have to investigate but I´m confident to do so. Switching from influxQL to Flux seems to be the only right decision.

Thanks also for recommending InfluxDB UI and guess haven´t used it before and will have a look.

To be honest having new problems to solve and do not know how.

Problem 1: Downsampling + Aggregation by Continuous Query is producing a mistake because of the time stamp which is used by aggregations like integral(). see topic.

Second topic. Why does this query work well:

influx -type=flux -path-prefix /api/v2/query -username user -password 123 -execute ‘from(bucket:“longterm”) |> range(start: -5h) |> filter(fn: (r) => r._measurement == “javascript.0.scriptEnabled.PV.WRPACges”) |> integral()’

And this query gives back an error:

influx -type=flux -path-prefix /api/v2/query -username user -password 123 -execute ‘from(bucket:“longterm”) |> range(start: -5h) |> filter(fn: (r) => r._measurement == “javascript.0.scriptEnabled.PV.WRPACges”) |> sum()’

Only difference is using sum() instead of integral(). Without using aggegation the output is a list of 5 datapoints each having a float value. The result for integral is right but i need sum(). sum() gives error:

unknown server error: 500 Internal Server Error

I do not understand why…

Thank you very much!

Hello @Pete0815,

Problem 1:

What do you mean by mistake because of the timestamp? Which timestamp would you like to use? Can you please provide a numerical example?

Problem 2:

I’m not sure why that isn’t working. Can you please share some of your data with me? You can export a small out with the UI easily. Not to be too redundant, but I think using the UI might help you debug some of your Flux.

Here are some blogs that could be useful to you:

Here are all of my blogs in case there are others that are useful as well:

https://www.influxdata.com/blog/author/anais/

Dear @Anaisdg,

Thank you very much and will try to export some data and come back. For problem 1 hope this example is useful:

04.12.2020 14:00h 0W

04.12.2020 14:30h 100W

04.12.2020 15:00h 100W

I´m trying to aggregate/downsample by integral() for 1h 14-15h and my expectation for the result is:

04.12.2020 15:00h 75Wh

But influxDB is giving back:

04.12.2020 14:00h 75Wh

This is problematic because of timestamp. InfluxDB is using timestamp of beginning (14h) of the integral period but the electric energy calculated by the integral is for the timestamp at 15h.

Have started to think if duplicate function for the timestamp can bring a sulution. But I´m at the beginning of understanding and testing.

2020-12-04-16-50 Chronograf Data.csv.gz (16.6 KB)

Hello @Anaisdg,

had a big improvement. Think influxDB UI is only available for v2.0 right? So installed Chronograf and this is very big help for developing Queries in influxQL but in addition also in FLUX. Please find attached a data export from my “shortterm” database for 3h.

The values are in W(att).

My final goal is to be able to have a longterm database grouped by(15m) which gives values in kWh.

Question is if i have to use (v1.8.3) Continuous Queries or am I also able to use Tasks?

Continuos Queries do calculate the integral right but I have the problem with the timestamp (as given example before/above).

Therefore I startet to develop in Flux and beginning with simple Queries like:

influx -type=flux -path-prefix /api/v2/query -username user -password xyz -execute ‘from(bucket:“longterm”)

|> range(start: -1h)

|> filter(fn: (r) => r._measurement == “javascript.0.scriptEnabled.PV.WRPACges”)

|> integral()’

Within this development process I recognized that integral() is working but sum() producing an error. Now I changed bucket from longterm to shortterm database and integral is also producing error. So guess this is based on wrong usage of aggegation by me. Haven´t had a closer look how timestamp can be handled and if this is working better than in influxQL for me.

Nevertheless I´m looking for a solution to downsample data (continuous query or task) by integral for filling a longterm database. Within that filling of longterm database the timestamp should be based on the Last Timestamp used in the intergral time period.

mmmh long story…sorry

Hello @Pete0815,

You can use the timeshift() function to shift the timestamp to the end of the integral.

I’m not sure why the sum() isn’t working. What error are you getting?

Hello @Anaisdg,

Thx!

For this Query:

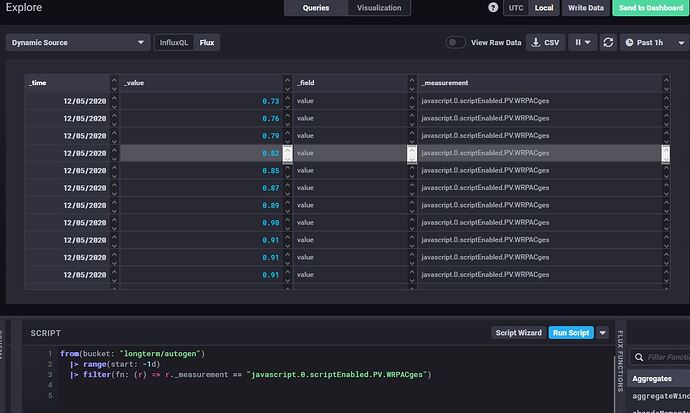

from(bucket: “longterm/autogen”)

|> range(start: -1d)

|> filter(fn: (r) => r._measurement == “javascript.0.scriptEnabled.PV.WRPACges”)

|> sum()

Getting in chronograf: Error: panic: runtime error: invalid memory adress or nil pointer dereference

Maybe it´s because the longterm database is filled by CQ.

Without sum() structure is looking like this:

Found a topic in this forum which caused issues of sum error by automatic grouping of influxdb by tag.

So I tried as recommended in this topic:

from(bucket: “longterm/autogen”)

|> range(start: -1d)

|> filter(fn: (r) => r._measurement == “javascript.0.scriptEnabled.PV.WRPACges”)

|> keep(columns:[“_value”])

|> sum()

But without success and same error as before.

Hello @Pete0815,

Is there anyway you can send me some of your data before the sum() so I can try on my end?

Thank you

Hello @Anaisdg

Thx, of course and please find attached data export for 3h before sum().

2020-12-08-11-35 Chronograf Data.csv.gz (525 Bytes)

Error of sum() is looking like this: